Ultimate Guide: 7 Pro Ways To Load Data Now

Introduction

Loading data efficiently is a crucial aspect of data analysis and management. Whether you’re a data scientist, analyst, or simply someone working with datasets, understanding the best practices for loading data can greatly enhance your workflow and productivity. In this ultimate guide, we will explore seven professional ways to load data, ensuring optimal performance and seamless integration into your projects. By the end of this article, you’ll have a comprehensive understanding of the most effective methods to load data and make informed decisions based on your specific needs.

Method 1: Database Connections

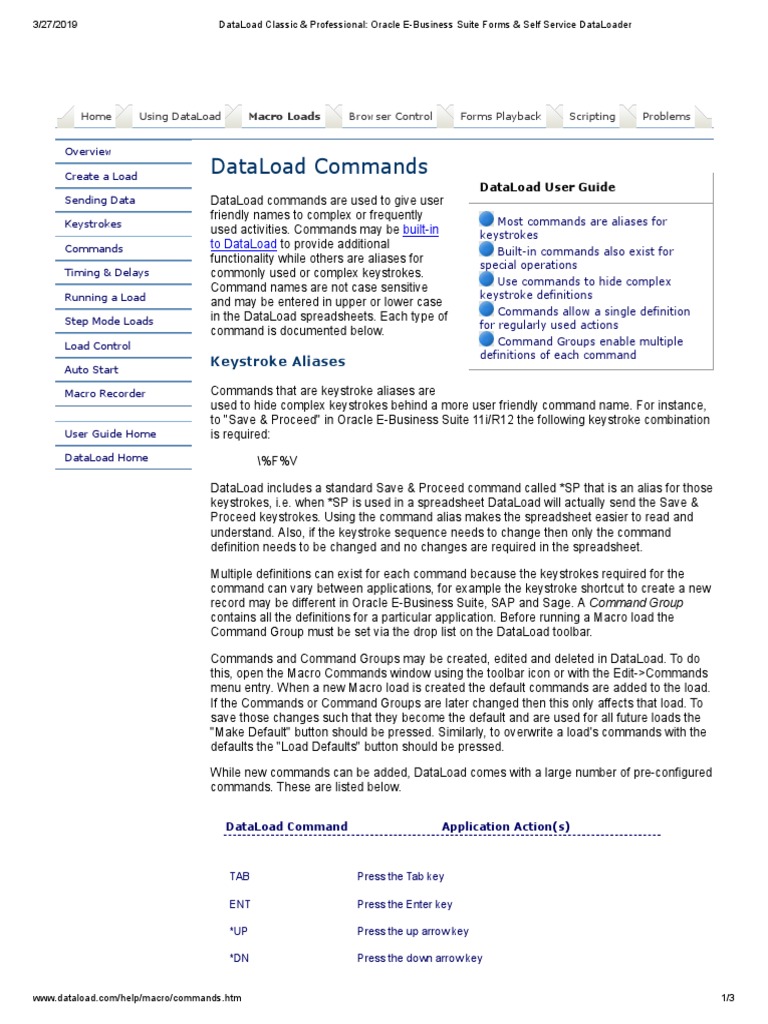

One of the most common and efficient ways to load data is by establishing a direct connection to a database. Relational databases such as MySQL and PostgreSQL store structured, tabular data, while document databases such as MongoDB store semi-structured documents; both kinds are well suited to large-scale data storage and retrieval. Here’s a step-by-step guide to loading data from a database:

Step 1: Choose the Right Database

- Select a Database Type: Decide on the type of database that best suits your data and project requirements. Consider factors like scalability, data structure, and the nature of your queries.

- Popular Options: MySQL, PostgreSQL, and MongoDB are widely used and offer excellent performance and flexibility.

Step 2: Establish a Connection

- Install Database Driver: Ensure you have the appropriate driver or connector installed for your chosen database. This allows your programming language or framework to communicate with the database.

- Connection Parameters: Gather the necessary connection details, such as the database host, port, username, and password.

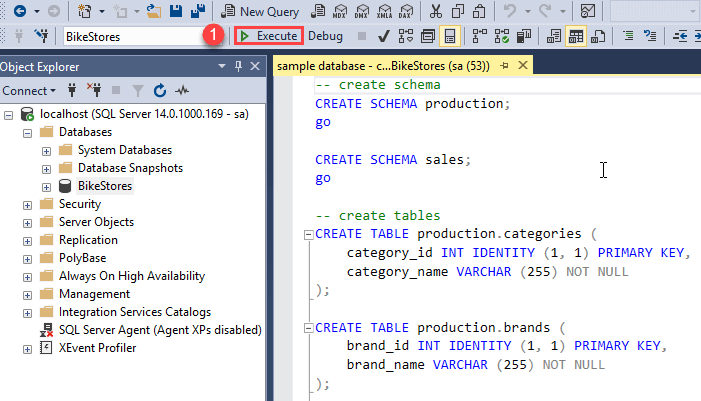

Step 3: Execute SQL Queries

- Structured Query Language (SQL): SQL is the standard language for interacting with relational databases such as MySQL and PostgreSQL. Write SQL queries to retrieve specific data from the database. (MongoDB is not queried with SQL; it uses its own query API.)

- Example Query: To fetch all records from a table named “customers,” you can use the following SQL query:

SELECT * FROM customers;

Step 4: Load Data into Your Application

- Data Retrieval: Execute the SQL query using your programming language or framework’s database library. This will fetch the data from the database.

- Handling Results: Process the retrieved data and integrate it into your application or analysis. You can use data structures like lists, dictionaries, or data frames to store and manipulate the loaded data.
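The four steps above can be sketched in Python. This is a minimal illustration rather than a production setup: it uses the standard library's `sqlite3` module with an in-memory database as a stand-in for MySQL or PostgreSQL, and the `customers` columns and sample rows are assumptions made up for the example.

```python
import sqlite3


def load_customers(conn: sqlite3.Connection) -> list[dict]:
    """Run the example query and return each row as a dictionary."""
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    cursor = conn.execute("SELECT * FROM customers;")
    return [dict(row) for row in cursor.fetchall()]


# Build a throwaway in-memory database so the sketch runs end to end.
# The table layout and rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers (name, city) VALUES (?, ?)",
    [("Ada", "London"), ("Grace", "New York")],
)
conn.commit()

customers = load_customers(conn)
conn.close()
```

With a server-based database, the connection call would instead take the host, port, username, and password from Step 2, using whichever driver you installed; the query and result handling would look much the same.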

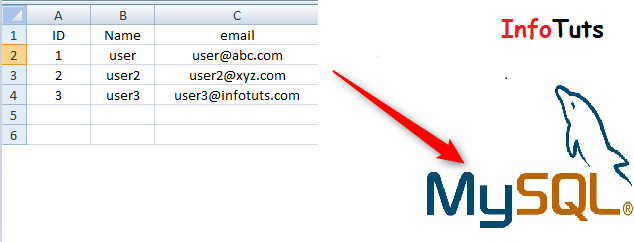

Method 2: CSV and Excel Files

CSV (Comma-Separated Values) and Excel files are widely used formats for storing and exchanging data. They are simple, human-readable, and compatible with various software tools. Here’s how to load data from these file formats:

Step 1: Choose the File Format

- CSV: CSV files are text-based and use commas to separate values. They are lightweight and easy to work with.

- Excel: Excel files (.xlsx or .xls) offer more complex data organization with multiple sheets and formatting options.

Step 2: Read the File

- CSV Reading: Use built-in functions or libraries in your programming language to read CSV files. For example, in Python, you can use the `csv` module.

- Excel Reading: Excel files can be read using libraries like `pandas` in Python or the `read_excel` function from the `readxl` package in R.

Step 3: Load Data into Your Application

- Data Parsing: Parse the file content to extract relevant data. Ensure proper handling of missing values, data types, and column names.

- Data Structure: Store the loaded data in appropriate data structures, such as data frames or lists, depending on your analysis needs.
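As a rough sketch of the reading and parsing steps for CSV, the example below uses Python's built-in `csv` module. The column names (`name`, `age`) and the sample rows are assumptions chosen for illustration, and an in-memory string stands in for a real file.

```python
import csv
import io


def load_csv(text_stream) -> list[dict]:
    """Parse CSV rows, converting types and treating empty cells as missing."""
    rows = []
    for record in csv.DictReader(text_stream):
        rows.append({
            "name": record["name"].strip(),
            # Empty strings become None instead of raising on int("").
            "age": int(record["age"]) if record["age"] else None,
        })
    return rows


# Hypothetical sample data; with a real file, pass open(path, newline="") instead.
sample = io.StringIO("name,age\nAda,36\nGrace,\n")
people = load_csv(sample)
```

Here each row becomes a dictionary in a list; for larger analyses, a data frame may be a more convenient structure.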

Method 3: API Integration

Application Programming Interfaces (APIs) provide a powerful way to access and load data from external sources. Many web services and platforms expose APIs that allow you to fetch data programmatically. Here’s a guide to loading data via APIs:

Step 1: Identify the API

- Research APIs: Identify APIs relevant to your data needs. Popular APIs include those for weather data, financial data, social media platforms, and more.

- API Documentation: Review the API documentation to understand its endpoints, parameters, and response formats.

Step 2: Make API Requests

- HTTP Requests: Use libraries like `requests` in Python or `httr` in R to send HTTP requests to the API. This involves specifying the API endpoint, providing necessary parameters, and handling authentication if required.

- Response Handling: Parse the API response to extract the data you need. This may involve handling JSON or XML data formats.

Step 3: Load Data into Your Application

- Data Processing: Clean and preprocess the loaded data to ensure it meets your analysis requirements.

- Data Storage: Decide on the best data structure to store the API-retrieved data, considering factors like memory usage and data access patterns.
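The request and response steps might look like the following sketch. To stay self-contained it uses the standard library's `urllib` rather than `requests`. The endpoint URL, the `city` parameter, the bearer-token authentication, and the `readings` field in the response are all hypothetical; a real API's documentation determines these details.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://api.example.com/v1/weather"  # hypothetical endpoint


def build_url(base_url: str, params: dict) -> str:
    """Attach URL-encoded query parameters to the endpoint."""
    return f"{base_url}?{urllib.parse.urlencode(params)}"


def parse_readings(body: str) -> list[dict]:
    """Extract the list of readings from a JSON response body."""
    payload = json.loads(body)
    return payload.get("readings", [])  # assumed field name


def fetch_readings(city: str, api_key: str) -> list[dict]:
    """Send the request with a bearer token and parse the response."""
    request = urllib.request.Request(
        build_url(BASE_URL, {"city": city}),
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return parse_readings(response.read().decode("utf-8"))


# Parsing can be exercised offline with a canned response body.
sample_body = '{"readings": [{"city": "Oslo", "temp_c": 4.5}]}'
readings = parse_readings(sample_body)
```

Keeping URL construction and response parsing in separate functions makes both easy to check without touching the network.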

Method 4: Web Scraping

Web scraping involves extracting data from websites by parsing HTML or XML content. While it can be a powerful method, it’s important to respect website terms of service and avoid overloading servers. Here’s a brief overview:

Step 1: Identify Target Websites

- Website Selection: Choose websites that provide relevant data and have a structure suitable for scraping.

- Respect Website Policies: Ensure you are not violating any terms of service or overloading the website’s servers.

Step 2: Parse HTML or XML

- HTML Parsing: Use libraries like `BeautifulSoup` in Python or `rvest` in R to parse HTML content and extract data.

- XML Parsing: For XML-based websites, libraries like `xml.etree.ElementTree` in Python or the `XML` package in R can be used.

Step 3: Load Data into Your Application

- Data Cleaning: Clean and validate the scraped data to ensure accuracy and consistency.

- Data Storage: Decide on the appropriate data structure to store the scraped data, considering its size and structure.
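To illustrate the parsing and cleaning steps without third-party dependencies, the sketch below uses the standard library's `html.parser` instead of `BeautifulSoup`. The HTML snippet, the table layout, and the `item` and `price` field names are invented for the example; a real page would be fetched first and would likely need more defensive handling.

```python
from html.parser import HTMLParser


class PriceTableParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._current_row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._current_row = []
        elif tag == "td" and self._current_row is not None:
            self._in_cell = True
            self._current_row.append("")

    def handle_data(self, data):
        if self._in_cell:
            self._current_row[-1] += data

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._current_row is not None:
            if self._current_row:  # rows with only <th> cells are skipped
                self.rows.append([cell.strip() for cell in self._current_row])
            self._current_row = None


# Hypothetical page fragment standing in for downloaded HTML.
html = """
<table>
  <tr><th>Item</th><th>Price</th></tr>
  <tr><td>Widget</td><td> 9.99 </td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""
parser = PriceTableParser()
parser.feed(html)
parser.close()

# Clean and type the scraped cells before storing them.
scraped = [{"item": name, "price": float(price)} for name, price in parser.rows]
```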

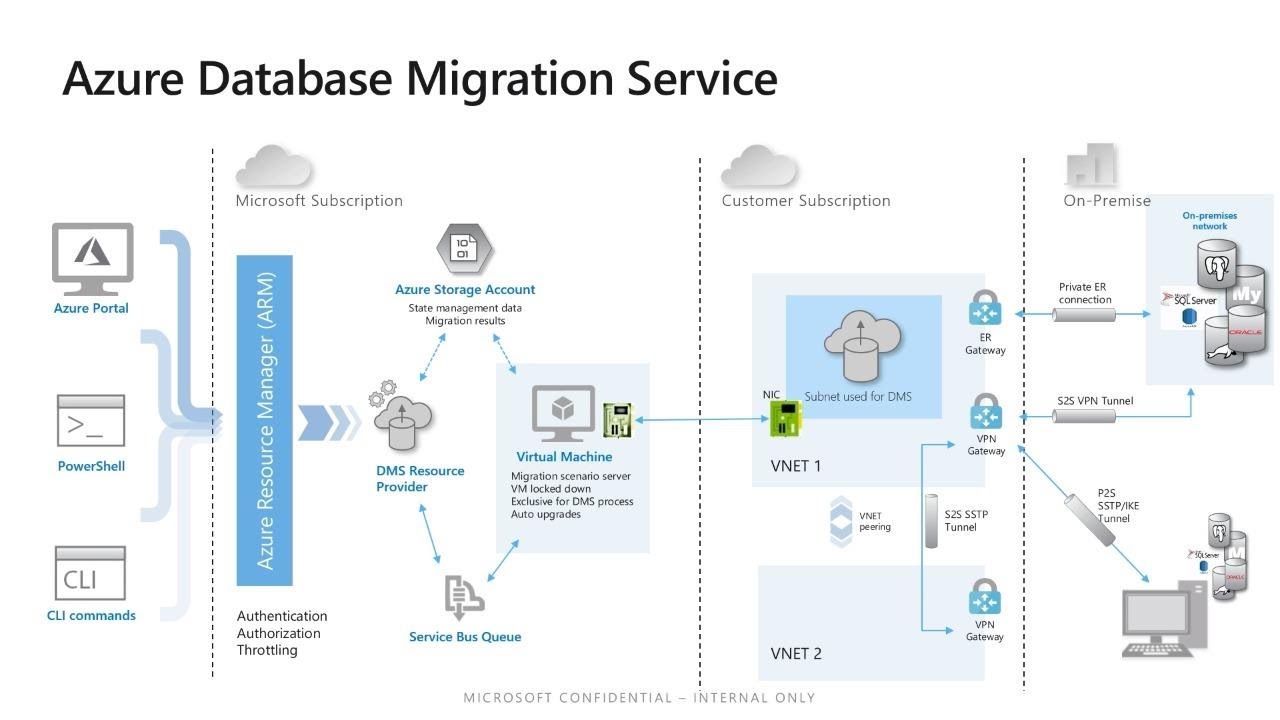

Method 5: Data Warehouses

Data warehouses are centralized repositories designed to store and manage large volumes of data from multiple sources. They are commonly used for business intelligence and analytics. Here’s an overview:

Step 1: Choose a Data Warehouse

- Data Warehouse Selection: Select a data warehouse solution that aligns with your organization’s needs and budget. Popular options include Amazon Redshift, Google BigQuery, and Microsoft Azure Synapse Analytics.

- Consider Scalability: Data warehouses should be able to handle large datasets and complex queries efficiently.

Step 2: Load Data into the Warehouse

- Data Ingestion: Use the data warehouse’s tools or APIs to load data from various sources, such as databases, files, or APIs.

- ETL Process: Implement an Extract, Transform, and Load (ETL) process to ensure data consistency and quality.
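A small ETL pipeline could be sketched as three functions, one per stage. This is only an illustration: an in-memory SQLite database stands in for a warehouse such as Redshift or BigQuery, and the `orders` table, its columns, and the sample rows are assumptions.

```python
import csv
import io
import sqlite3


def extract(text_stream) -> list[dict]:
    """Extract: read raw records from a CSV source."""
    return list(csv.DictReader(text_stream))


def transform(records: list[dict]) -> list[tuple]:
    """Transform: normalize country codes, cast amounts, drop incomplete rows."""
    cleaned = []
    for record in records:
        if not record["amount"]:
            continue  # skip rows with a missing amount
        country = record["country"].strip().upper()
        cleaned.append((record["order_id"], country, float(record["amount"])))
    return cleaned


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id TEXT, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


# Hypothetical source data with inconsistent casing and one missing amount.
source = io.StringIO("order_id,country,amount\nA1, us ,10.50\nA2,de,\nA3,DE,4.50\n")
warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse connection
load(warehouse, transform(extract(source)))

# Once loaded, the data can be queried with SQL.
totals = warehouse.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
```

Real warehouses usually provide bulk-loading tools that are far faster than row-by-row inserts, so treat the `load` step here as a placeholder for whatever ingestion path your warehouse recommends.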

Step 3: Query and Analyze Data

- SQL Queries: Data warehouses are typically queried using SQL. Write optimized queries to retrieve the required data.

- Business Intelligence: Leverage the data warehouse’s features for advanced analytics, reporting, and visualization.

Method 6: Cloud Storage Services

Cloud storage services, such as Amazon S3, Google Cloud Storage, or Microsoft Azure Blob Storage, offer scalable and cost-effective solutions for storing and accessing large datasets. Here’s how to load data from cloud storage:

Step 1: Choose a Cloud Storage Service

- Cloud Provider Selection: Select a cloud storage service that aligns with your organization’s infrastructure and requirements.

- Consider Security: Ensure the chosen service provides adequate security measures for your data.

Step 2: Upload Data to Cloud Storage

- Data Upload: Use the cloud storage provider’s tools or APIs to upload your data files. This can be done via the web interface or programmatically.

- File Organization: Organize your data files in a structured manner to facilitate easy access and retrieval.

Step 3: Load Data into Your Application

- Cloud Access: Use the cloud storage provider’s SDK or API to access your data files from your application.

- Data Download: Download the required data files or use streaming capabilities to process the data directly from the cloud.
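The access and download steps might be sketched as follows for an S3-style service. The function expects an object that behaves like a boto3 S3 client, whose `get_object(Bucket=..., Key=...)` call returns a dictionary with a readable `"Body"`. So that the example runs without credentials, a small fake client is used here; the bucket name, object key, and file contents are hypothetical.

```python
import csv
import io


def load_csv_from_bucket(client, bucket: str, key: str) -> list[dict]:
    """Download one object and parse it as CSV.

    `client` is expected to behave like a boto3 S3 client, whose
    get_object(Bucket=..., Key=...) returns a dict with a readable "Body".
    """
    response = client.get_object(Bucket=bucket, Key=key)
    text = response["Body"].read().decode("utf-8")
    return list(csv.DictReader(io.StringIO(text)))


class FakeS3Client:
    """In-memory stand-in so the sketch runs without cloud credentials."""

    def __init__(self, objects: dict):
        self._objects = objects

    def get_object(self, Bucket: str, Key: str) -> dict:
        return {"Body": io.BytesIO(self._objects[(Bucket, Key)])}


# Hypothetical bucket and key names.
client = FakeS3Client(
    {("my-data-bucket", "sales/2024.csv"): b"region,units\nnorth,12\nsouth,7\n"}
)
rows = load_csv_from_bucket(client, "my-data-bucket", "sales/2024.csv")
```

With boto3 installed and credentials configured, passing `boto3.client("s3")` in place of the fake client should work the same way. Google Cloud Storage and Azure Blob Storage have their own SDKs with different call names.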

Method 7: Data Streams

Data streams are continuous flows of data, often generated in real-time by sensors, devices, or applications. Loading data from streams requires specialized techniques and tools. Here’s an introduction:

Step 1: Identify Data Stream Sources

- Stream Sources: Identify the sources of your data streams, such as IoT devices, social media platforms, or real-time sensors.

- Stream Protocols: Understand the protocols and formats used by these sources to transmit data.

Step 2: Capture and Process Data Streams

- Stream Processing: Use an event streaming platform such as Apache Kafka to capture data streams, and a stream processing framework such as Apache Flink or Apache Storm to process them in real time.

- Data Analysis: Perform real-time analytics on the streaming data to extract valuable insights.
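The core idea of processing readings as they arrive, rather than loading a complete dataset first, can be shown with a plain Python generator. This sketch does not use Kafka, Flink, or Storm; the sensor source and its values are hypothetical, and the rolling mean is just one example of a real-time computation.

```python
from collections import deque
from typing import Iterable, Iterator


def rolling_mean(stream: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the most recent `window` readings as each one arrives."""
    recent = deque(maxlen=window)  # old readings fall off automatically
    for reading in stream:
        recent.append(reading)
        yield sum(recent) / len(recent)


def sensor_readings() -> Iterator[float]:
    """Hypothetical source; a real one would read from a socket or message queue."""
    yield from [20.0, 22.0, 24.0, 30.0]


averages = list(rolling_mean(sensor_readings(), window=2))
```

Because the function keeps only the last `window` readings in memory, it can run over a stream that never ends.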

Step 3: Store and Analyze Streamed Data

- Data Storage: Decide on the appropriate data storage solution for your streamed data, considering factors like scalability, retention, and query performance.

- Data Analysis: Leverage the stored data for historical analysis and trend identification.

Conclusion

Loading data efficiently is a critical skill for data professionals, and the seven methods outlined in this guide offer a comprehensive toolkit for various data loading scenarios. Whether you’re working with databases, files, APIs, or real-time data streams, choosing the right method and optimizing your data loading process can greatly impact the success of your projects. Remember to consider factors like data volume, data structure, and the specific requirements of your analysis or application when selecting a data loading approach.

📝 Note: The methods presented here are just a starting point. Depending on your project's unique needs, you may need to explore additional techniques or combine multiple methods for optimal results.

FAQ

What is the most common method for loading data?

CSV and Excel files are among the most common and accessible sources for loading data. They are widely supported and easy to work with.

How do I choose between a database and an API for data loading?

Consider the scale of your data and the frequency of updates. Databases are better for large-scale, structured data, while APIs are ideal for real-time, dynamic data retrieval.

Can I load data from multiple sources simultaneously?

Yes, you can load data from multiple sources using techniques like data integration or ETL processes. This allows you to combine data from various databases, files, or APIs.

What are the benefits of using a data warehouse for data loading?

Data warehouses provide a centralized location for data storage and analysis, offering scalability, efficient querying, and advanced analytics capabilities.